Creating a WASM Library with Embind

I've been working on my Test Jam project for a while. I had previously tried to build a WASM wrapper around my C/C++ build, but I ran into a memory leak that existed outside the C/C++ code. Instead of reading the manual, I rewrote the code in TypeScript. After the rewrite, I read the manual and learned the source of the leak. I then rebuilt my WASM wrapper and ended up with both a TypeScript and a WASM implementation, so I decided to compare the two to see what the benefits and drawbacks are. I also wanted to test some well-touted claims about WASM (e.g. performance, code reuse) in the context of a library. So I started poking and prodding, and then I created this post.

Performance

We'll start off with the typical performance stuff first. For this test, I'm going to benchmark three methods: one which returns a random number, one which returns a random ASCII string (with any ASCII character), and one which returns a random URL. Each one stresses a different aspect of the JavaScript/WASM boundary: plain numbers, string conversion, and a moderately complex workload.

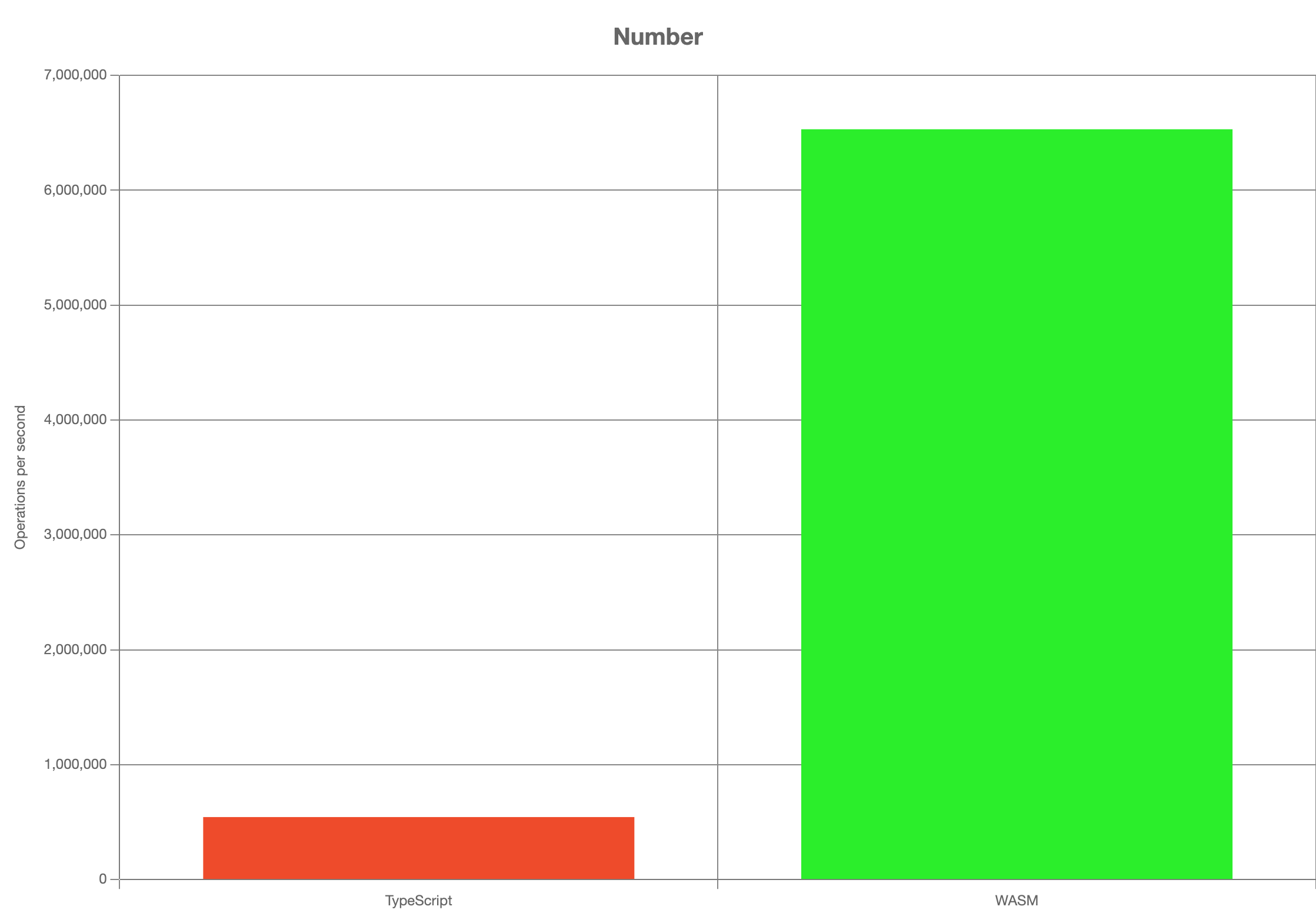

Ideal Case: Numbers

The random number method is the ideal WASM use case. WASM works really well when transferring numbers that can be converted into a 64-bit floating point number. There's little overhead, and it's supposed to be fast. I'm using the TinyMT algorithm for both, with the native C implementation for WASM and my own port for TypeScript/JavaScript. The results are shown in Figure 1. For all benchmarks, we're measuring operations per second, so higher is better. Also, TypeScript will always be on the left and WASM will always be on the right.
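For anyone who wants to reproduce this kind of measurement, here is a minimal sketch of an operations-per-second loop. It is not necessarily how the figures were produced; `opsPerSecond` is a hypothetical helper that calls a function repeatedly for a fixed time window and divides the call count by the elapsed seconds.

```javascript
const { performance } = require('perf_hooks')

// Hypothetical helper: call `fn` repeatedly for roughly `durationMs`
// milliseconds and report how many calls completed per second.
function opsPerSecond(fn, durationMs = 1000) {
  // Warm up so the engine has a chance to optimize `fn` before timing starts
  for (let i = 0; i < 1000; ++i) fn()

  let ops = 0
  let elapsed = 0
  const start = performance.now()
  while (elapsed < durationMs) {
    // Check the clock every 100 calls to keep timer overhead low
    for (let i = 0; i < 100; ++i) fn()
    ops += 100
    elapsed = performance.now() - start
  }
  return ops / (elapsed / 1000)
}

// Usage: a plain JavaScript random number stands in for either implementation.
// Accumulating into `sink` keeps the call from being optimized away.
let sink = 0
const rate = opsPerSecond(() => { sink += Math.random() }, 200)
console.log(`${Math.round(rate)} ops/sec`)
```

Dedicated benchmarking libraries add statistical analysis and outlier handling on top of this; the loop above only shows the basic idea.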

When WASM returns data, it returns raw memory, and the host code (in this case JavaScript) must convert that memory into something useful, and then it must tell WASM "hey I'm done with the memory, clean it up please". In our case, we're just returning a double, which JavaScript just copies into a double, so barely any work was done.

But what if we returned a string?

Returning Random Strings

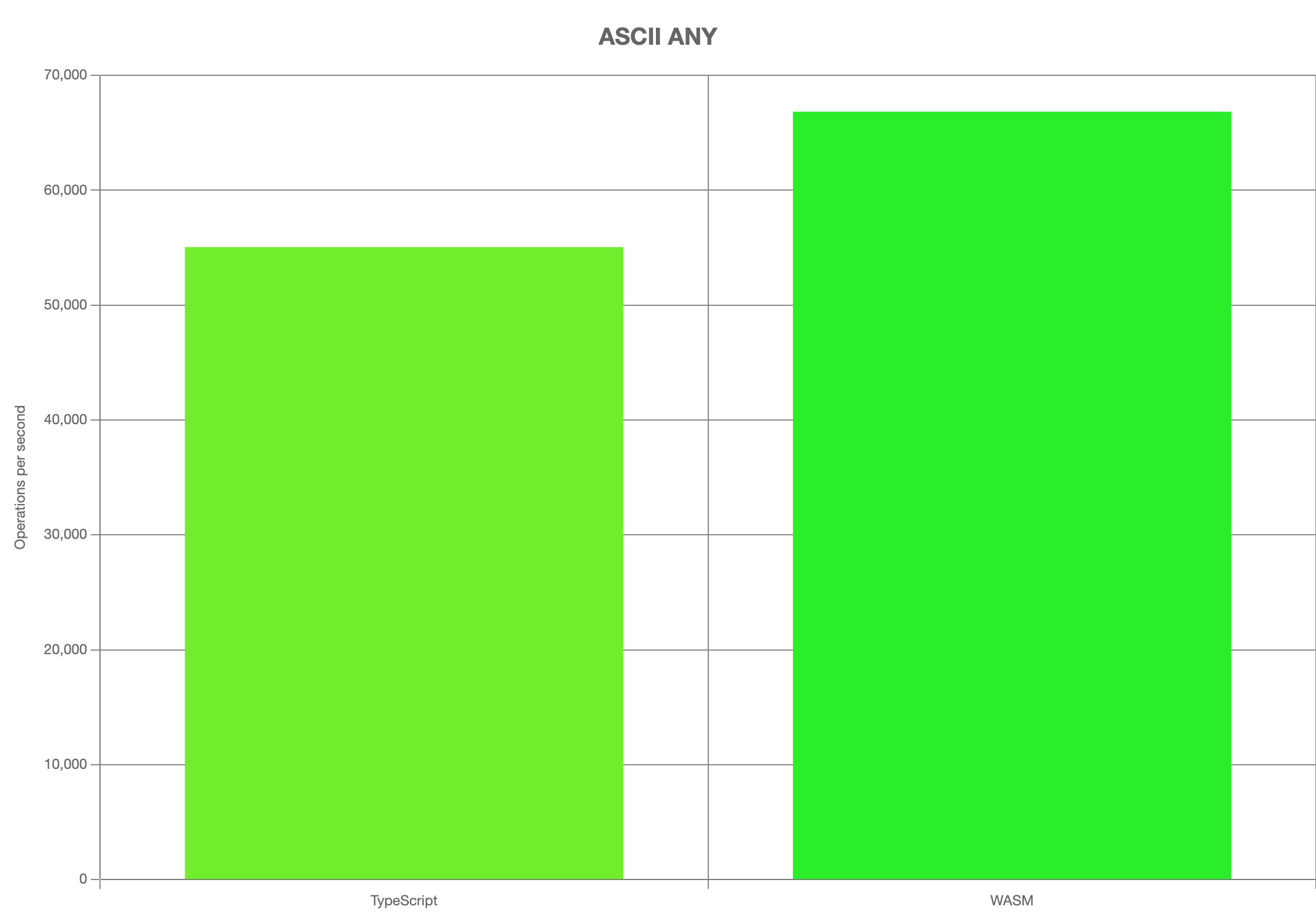

Specifically, we're returning a C++ std::string, which uses 8-bit characters. That means we're using either ASCII or UTF-8 strings. However, JavaScript uses UTF-16 strings, so we need to convert our 8-bit C++ strings into JavaScript's 16-bit strings. Emscripten does this for us automatically (though it does assume UTF-8), but it is a runtime cost. I'm not too worried about the choice of source language here, since other languages that use ASCII/UTF-8 strings will pay a similar cost.
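To make that conversion cost concrete, here is a minimal sketch of what decoding looks like from the JavaScript side. It assumes a simplified model where WASM linear memory is just a `Uint8Array` and the C++ side has handed back a pointer and a byte length; `writeUtf8` and `readUtf8` are hypothetical helpers, not Emscripten's actual implementation. The point is that every byte has to be walked and a brand-new UTF-16 JavaScript string has to be built.

```javascript
// Stand-in for WASM linear memory (a real module exposes its memory as an
// ArrayBuffer; here we just allocate one ourselves).
const heap = new Uint8Array(1024)

// Hypothetical: pretend the C++ side wrote UTF-8 bytes at `ptr`.
function writeUtf8(ptr, str) {
  const bytes = new TextEncoder().encode(str)
  heap.set(bytes, ptr)
  return bytes.length
}

const decoder = new TextDecoder('utf-8')

// Decode `len` bytes starting at `ptr` into a new UTF-16 JavaScript string.
// Walking every byte to build that string is the runtime cost.
function readUtf8(ptr, len) {
  return decoder.decode(heap.subarray(ptr, ptr + len))
}

const len = writeUtf8(16, 'h\u00e9llo')
console.log(len)                      // 6 (UTF-8 needs two bytes for "é")
console.log(readUtf8(16, len))        // héllo
console.log(readUtf8(16, len).length) // 5 (UTF-16 code units)
```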

We can see the difference if we actually read the string. I chose to do that by using a TextEncoder to encode both strings. This allows us to see both the string generation time and the string access time. The results are in Figure 3.

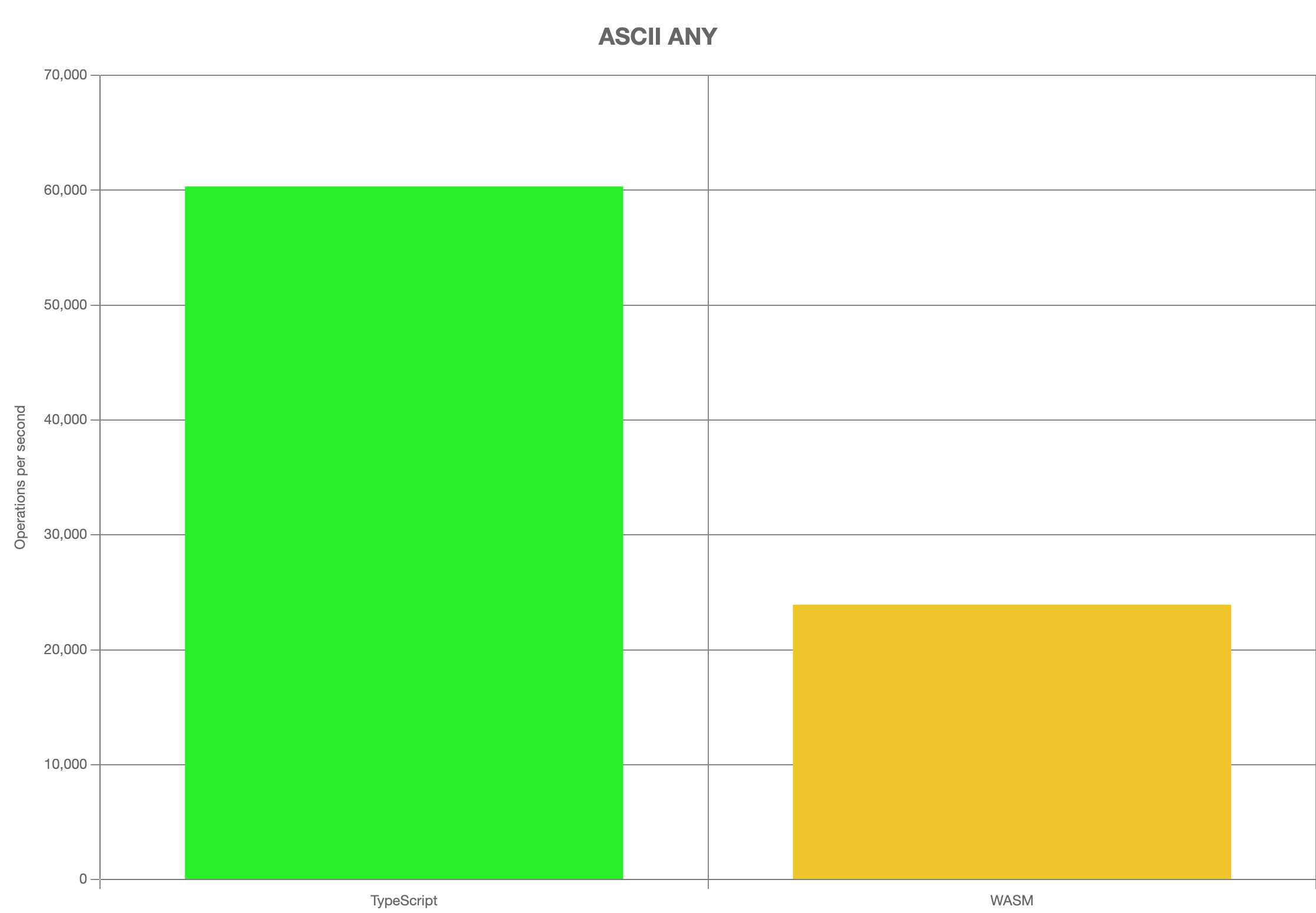
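Here is a sketch of that read step, assuming a hypothetical `randomAscii` generator written in plain JavaScript (it uses `Math.random`, not the TinyMT generator from the benchmarks). Encoding the result forces the engine to touch every character, so a timed run covers both producing the string and reading it.

```javascript
// Hypothetical stand-in for either implementation's random string method:
// returns `length` characters with codes 0-127 (any ASCII character).
function randomAscii(length) {
  let out = ''
  for (let i = 0; i < length; ++i) {
    out += String.fromCharCode(Math.floor(Math.random() * 128))
  }
  return out
}

const encoder = new TextEncoder()

// One benchmark "operation": generate a string, then read it back by
// encoding it to UTF-8 bytes.
function generateAndRead(length) {
  return encoder.encode(randomAscii(length))
}

const bytes = generateAndRead(32)
// ASCII characters take one byte each in UTF-8
console.log(bytes.length) // 32
```

Wrapped in an ops-per-second loop, `generateAndRead` measures generation plus access rather than generation alone.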

It's also not necessarily a typical comparison. Generally I would have implemented my WASM code using std::stringstream, but I wanted to push myself so I used preallocated buffers and had optimizations to lower heap allocations wherever I could. If I switch to std::stringstream (how I'd normally write it), then TypeScript will take the lead again, as shown in Figure 4. TypeScript has around 60,000 operations per second while WASM is only 25,000 operations per second.

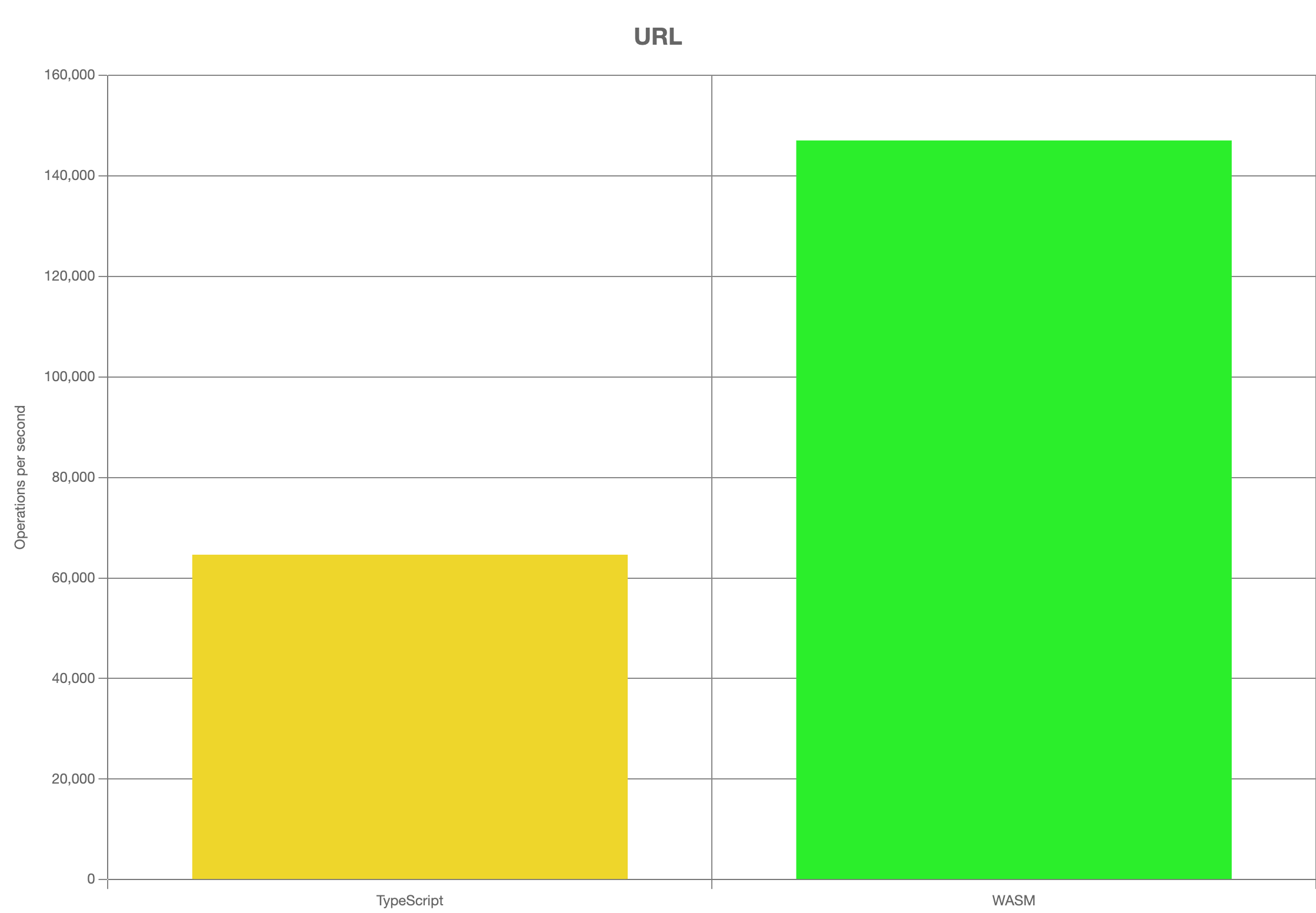

Returning Random URLs

Now onto our final benchmark, the URL generation. My random ASCII string code is just a simple loop, so it's pretty easy for V8 to optimize. I wanted to see how well JavaScript and WASM compared when there was a moderate amount of complexity. The results are shown in Figure 5. TypeScript has 65,000 operations per second, while WASM has 145,000 operations per second. Again, WASM code is optimized to minimize heap usage.

Memory Management

One of the benefits of WASM is the ability to reuse native code. That sounds great, but there's a catch: WASM has a memory model that doesn't play nicely with JavaScript, so reusing native code through WASM can be difficult.

WASM code is given a chunk of memory, and then it is up to the WASM developer to decide how to manage that memory. When WASM returns a value to JavaScript, what it returns is a reference to a chunk of that memory. The WASM code then needs to keep that memory valid for as long as JavaScript is using it. In C/C++, this is done by not calling free, and in Rust it's done by telling the compiler to stop tracking ownership of the value (e.g. with Box::into_raw or std::mem::forget). In turn, JavaScript must let WASM know when it's done with that memory so that WASM can free it. In Embind this is done by calling a delete method.

This sounds simple, until we realize that this means doing manual memory management in a garbage collected language. What's more, this is manual memory management inside of JavaScript - a language known for weird quirks and behaviors. Furthermore, WASM heaps tend to be limited in size, so it's quite possible for WASM code to run out of memory while the JavaScript engine still has memory! This makes managing WASM memory even more complicated. So, let's look at some different strategies for dealing with WASM memory, and the quirks to look out for.

Strategy 1: Manual Memory Management

The first approach is for the JavaScript developer to channel their inner C developer and clean up the memory manually. Because JavaScript can throw (and throws are non-obvious), every delete call should be done in a try/finally, like so:

```javascript
const wasmObj = new myWasmModule.MyCppClass()
try {
  // Do something with wasmObj ...
} finally {
  // Runs even if the code above throws
  wasmObj.delete()
}
```
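When several WASM objects are alive at once, these try/finally blocks start to nest. A small helper can pair each allocation with its `delete()` call in one place. This is a sketch: `withWasm` is a hypothetical name, and the only thing it assumes about the object is that it has a `delete()` method, as Embind objects do.

```javascript
// Hypothetical helper: run `fn` with a freshly created WASM object and
// always delete the object afterwards, even if `fn` throws.
// Only use it with a synchronous `fn`; an async callback would return a
// promise immediately and the object would be deleted while still in use.
function withWasm(create, fn) {
  const obj = create()
  try {
    return fn(obj)
  } finally {
    obj.delete()
  }
}

// Usage with a mock object standing in for an Embind class
let deleted = 0
const makeMock = () => ({ value: 21, delete() { deleted++ } })

const result = withWasm(makeMock, a =>
  withWasm(makeMock, b => a.value + b.value))
console.log(result, deleted) // 42 2
```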

For synchronous code, this seems simple enough (other than nested try/finally hell). However, the biggest gotchas with this approach are async code (promises, callbacks, etc.) and generator functions. Consider the following async code:

```javascript
// Runs inside an async function
const textProcessor = new myWasmModule.TextProcessor()
try {
  const results = await Promise.all([
    fetch(myUrl1).then(res => res.text()),
    fetch(myUrl2).then(res => res.text())
  ])
  return results.map(res => textProcessor.process(res))
} finally {
  // Not reached while either fetch is still pending
  textProcessor.delete()
}
```

If one of the fetch calls hangs, then the WASM memory will stay around indefinitely. This is true of any async operation. The solution is to not allocate memory before queuing an async operation. Instead, allocations should only be done inside the synchronous step and then be deallocated immediately:

```javascript
// Runs inside an async function
const results = await Promise.all([
  fetch(myUrl1).then(res => res.text()),
  fetch(myUrl2).then(res => res.text())
])

return results.map(res => {
  // Allocate only after all awaits are done, then free immediately
  const textProcessor = new myWasmModule.TextProcessor()
  try {
    return textProcessor.process(res)
  } finally {
    textProcessor.delete()
  }
})
```

Alternatively, all async operations could have timeouts and limits to guarantee that they complete in a reasonable amount of time. Just be careful not to create too many pending memory allocations at once, otherwise WASM could run out of memory!
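One way to put an upper bound on how long an async step can take is to race it against a timer. Below is a minimal sketch; `withTimeout` is a hypothetical helper. For fetch specifically, passing `AbortSignal.timeout(ms)` as the `signal` option is a built-in alternative in modern runtimes.

```javascript
// Hypothetical helper: reject if `promise` hasn't settled within `ms`.
// The timer is cleared either way so it doesn't keep the process alive.
function withTimeout(promise, ms) {
  let timer
  const timeout = new Promise((_, reject) => {
    timer = setTimeout(() => reject(new Error(`Timed out after ${ms}ms`)), ms)
  })
  return Promise.race([promise, timeout]).finally(() => clearTimeout(timer))
}

// Usage: a task that never settles is cut off after 50ms
const never = new Promise(() => {})
withTimeout(never, 50).catch(err => console.log(err.message)) // Timed out after 50ms
```

Note that `Promise.race` only stops the waiting; the underlying operation (e.g. the network request) is not cancelled.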

Generator functions also throw a few more wrenches into the mix. See if you can find the bug in the following code.

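```javascript
function* myGen() {
  const queue = new wasm.QueueOfRandomNumbers()
  try {
    for (; !queue.empty(); queue.pop()) {
      yield queue.front()
    }
  } finally {
    queue.delete()
  }
}

function first(seq) {
  // Advance the generator once by hand, then drop it
  return seq.next().value
}

console.log(first(myGen()))
```

It looks correct (we're wrapping with a try/finally), but the above code will leak memory. The reason is that generators suspend execution whenever they yield. Code inside a finally block only runs when the generator finishes with a return or a throw, or when something calls the generator's return() method. It never runs just because the generator is suspended at a yield. (A for...of loop that exits early does call return() automatically, which is why first() advances the generator by hand.)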

Since our generator only reaches a yield and never finishes, our finally block never runs. The garbage collector will eventually clean up our generator's stack, but when it does it will never reactivate the code. It'll end up deleting our pointer to WASM memory without ever calling our delete method and it will cause a memory leak! I haven't found a good way to manually clean up memory with generators. For now, my recommendation is to just avoid allocating WASM memory inside a generator itself.
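The finally block does run if the consumer explicitly closes the generator with `return()`. That puts the burden on every caller, so it doesn't remove the problem, but it is a useful way to confirm the behavior. The sketch below swaps `wasm.QueueOfRandomNumbers` for a hypothetical mock queue that counts `delete()` calls.

```javascript
// Hypothetical mock standing in for an Embind-bound queue:
// counts how many times delete() has been called.
let deletes = 0
function makeMockQueue(values) {
  const items = [...values]
  return {
    empty: () => items.length === 0,
    front: () => items[0],
    pop: () => { items.shift() },
    delete: () => { deletes++ },
  }
}

function* myGen() {
  const queue = makeMockQueue([4, 8, 15])
  try {
    for (; !queue.empty(); queue.pop()) {
      yield queue.front()
    }
  } finally {
    queue.delete()
  }
}

const gen = myGen()
const first = gen.next().value // generator is now suspended at the yield
console.log(first, deletes)    // 4 0 (finally has not run)

gen.return()                   // resumes the generator as if `return` ran at the yield
console.log(deletes)           // 1 (finally ran and called delete())
```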

The manual memory management approach is fraught with peril. We have to be extremely careful with how and where we clean up memory. Manually freeing is a style some C developers may like, but most JavaScript developers want something a little more automated. So let's look at something else.

Strategy 2: FinalizationRegistry

Having a way to clean up WASM memory when the JavaScript garbage collector runs would be nice. One way to (theoretically) do this is with FinalizationRegistry. The idea behind FinalizationRegistry is that you can register a callback to run after an object is garbage collected, which lets us perform extra cleanup steps. Some example code is below:

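```javascript
// JavaScript code
const mod = require('./cpp-wasm-output')

const maxIter = 3

// When a registered wrapper is garbage collected, delete its WASM object
const wasmRegistry = new FinalizationRegistry((val) => { val.delete() })

function str() { return {str: new mod.MyString()} }

for (let i = 0; i < maxIter; ++i) {
  const val = str()
  // Watch the wrapper object; hand its WASM object to the cleanup callback
  wasmRegistry.register(val, val.str)
}
```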

And here's the C++ code:

```cpp
// C++ Code
#include <string>
#include <cstdio>
#include <emscripten/bind.h>

using namespace emscripten;

struct MyString {
  // 99,999 'a' characters so each instance takes noticeable WASM memory
  std::string value;

  MyString() : value(99999UL, 'a') {
    printf("initializing\n");
  }

  ~MyString() {
    printf("cleaning up\n");
  }
};

EMSCRIPTEN_BINDINGS(Demo) {
  class_<MyString>("MyString")
    .constructor<>()
    .property("value", &MyString::value);
}
```

We're just wrapping a C++ string and adding a print statement when a string is created and another when it's cleaned up. This lets us track when our WASM memory is allocated and freed. Let's run the code. Below is my output.

```
initializing
initializing
initializing
cleaning up
cleaning up
```

There are only two "cleaning up" prints when there should be three. This is because FinalizationRegistry makes almost no guarantees. A JavaScript engine can skip cleanup callbacks entirely and still be standards compliant. Even when callbacks do run, their timing is unreliable and can vary from runtime to runtime (e.g. Chrome will act differently than Firefox). It's frustrating that this feature is so promising on the surface, but so terrible in reality. Fortunately, there are other ways.
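Because the callbacks aren't guaranteed, FinalizationRegistry is better treated as a safety net on top of explicit cleanup than as a replacement for it. Here's a sketch of that pattern, assuming only that the wrapped object has a `delete()` method. The wrapper registers itself with an unregister token, and an explicit `dispose()` (a hypothetical method name) deletes the WASM object and unregisters it so the callback can never double-free.

```javascript
// Safety net: only fires if a wrapper is collected without dispose() being called
const registry = new FinalizationRegistry(wasmObj => wasmObj.delete())

class Managed {
  constructor(wasmObj) {
    this.wasmObj = wasmObj
    // target = this wrapper, held value = the WASM object, token = this wrapper
    registry.register(this, wasmObj, this)
  }

  dispose() {
    if (this.wasmObj === null) return // already disposed
    registry.unregister(this)
    this.wasmObj.delete()
    this.wasmObj = null
  }
}

// Usage with a mock object in place of an Embind instance
let deleteCount = 0
const m = new Managed({ delete() { deleteCount++ } })
m.dispose()
m.dispose() // second call is a no-op
console.log(deleteCount) // 1
```

This doesn't fix the reliability problem; it just means a forgotten `dispose()` has some chance of being caught instead of none.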

Strategy 3: Custom Garbage Collector

Instead of relying on the built-in garbage collector, let's make our own. Bram Wasti gives details on how to create a custom garbage collector for WASM, and my garbage collector is a modified version of his. Our garbage collector uses WeakRefs instead of FinalizationRegistry. A WeakRef is a reference to a JavaScript object that doesn't prevent the object from being cleaned up. Once the garbage collector cleans up the referenced object, the WeakRef's deref() method starts returning undefined. This lets us detect for ourselves when an object has been cleaned up. Fortunately, WeakRefs are a well-defined and required part of the standard, so they won't be blatantly ignored. Here is my version of a garbage collector:

```javascript
const mod = require('./cpp-wasm-output') // Load WASM code

// used to allow terminating a node process
// not required for processes which never end (e.g. server, browser)
let stopGc = false

// tracks WASM allocations: id -> [WeakRef to JS wrapper, cleanup function]
const managed = {};

(async function garbageCollector() {
  const forceCleanup = (key, cleanup) => {
    cleanup()
    delete managed[key]
  }

  const tryCleanup = ([key, [ptr, cleanup]]) => {
    // deref() returns undefined once the wrapper has been collected
    if (!ptr.deref()) {
      forceCleanup(key, cleanup)
    }
  }

  // Main GC loop
  while (!stopGc) {
    Object.entries(managed).forEach(tryCleanup)
    await new Promise(res => setTimeout(res, 10))
  }

  // Exiting program, ensure proper cleanup
  Object.entries(managed).forEach(([key, [, cleanup]]) => forceCleanup(key, cleanup))
})();

// For production, replace with better ID generation
let id = 0

function manage(obj, cleanup) {
  managed[id++] = [new WeakRef(obj), cleanup]
}

// Wrap WASM class
class MyString {
  constructor() {
    // Create our WASM object
    const data = new mod.MyString()
    // Add to our custom GC tracker (the cleanup closure must not capture `this`,
    // or the wrapper could never be collected)
    manage(this, () => data.delete())
    // Add as a class property
    this.data = data
  }

  str() { return this.data.value }
}

// Do our iterations
async function f() {
  const maxIters = 25
  for (let i = 0; i < maxIters; ++i) {
    const v = new MyString()
    // Allocate a lot of memory to create memory pressure
    // Without memory pressure, the GC won't update WeakRefs
    Array.from({length: 50000}, () => () => {})
    // Also, since our GC is on a timer we need to wait
    await new Promise(res => setTimeout(res, 100))
  }
}

// Call f() and then tell the GC to end the program
f().then(() => stopGc = true)
```

There is still wonky behavior due to how V8's garbage collector works. Sometimes it cleaned up references mid-run; other times it cleaned up everything as the process was closing. On top of that, the garbage collector is driven by memory pressure on the JavaScript heap, not on the WASM heap. This makes it much harder to prevent WASM memory leaks when there are lots of WASM allocations but very few JavaScript allocations, so "out of memory" errors are still highly probable. All in all, this strategy isn't very predictable, which can cause issues when the WASM heap is size-constrained.

Strategy 4: Copy into JavaScript

Another way to deal with WASM memory is to copy the data into JavaScript objects and then immediately free the WASM memory. It does take writing some wrapper code to do the copying, but the benefit is that the rest of the code deals with normal JavaScript objects, so there's no need to worry about memory cleanup outside of the wrappers. The downside is performance. It also only works well for one-way data (such as data generators). It doesn't work well if you want to make back-and-forth calls on the same data (like calling methods on a C++ class).

The code would look something like the following:

```javascript
const mod = require('./cpp-wasm-output')

class MyString {
  constructor() {
    const data = new mod.MyString()
    try {
      // Embind converted data.value to a JS string
      // so we don't have to deep copy the string
      this.data = data.value
    } finally {
      // Immediately clean up our WASM memory
      data.delete()
    }
  }
}

const maxIters = 25
for (let i = 0; i < maxIters; ++i) {
  const v = new MyString()
}
```
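The same copy-then-delete idea extends to containers. Embind can expose a `std::vector` through `register_vector`, which gives the JavaScript object `size()` and `get(i)` methods along with `delete()`. Here's a sketch of a copy-out helper; `vectorToArray` is a hypothetical name, and the usage runs against a mock so it works without a WASM build.

```javascript
// Hypothetical helper: copy every element of an Embind-style vector into a
// plain JavaScript array, then free the WASM-side vector immediately.
function vectorToArray(vec) {
  try {
    const out = new Array(vec.size())
    for (let i = 0; i < out.length; ++i) {
      out[i] = vec.get(i)
    }
    return out
  } finally {
    vec.delete()
  }
}

// Mock with the same method names, standing in for a real bound vector
let freed = false
const mockVec = {
  items: [3, 1, 4],
  size() { return this.items.length },
  get(i) { return this.items[i] },
  delete() { freed = true },
}

console.log(vectorToArray(mockVec), freed) // [ 3, 1, 4 ] true
```

This works cleanly for numbers and strings; if the elements are themselves bound C++ objects, they may need their own copy-and-delete step.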

I have yet to find a perfect solution for WASM memory. There are some drawbacks to all of them. The custom garbage collector is pretty nice and would work for many use cases. To be truly universal it would need some work. For instance, we need a way for a failed WASM allocation to trigger a JavaScript garbage collector cleanup (which would then update our WeakRefs), trigger our custom garbage collector, and then retry our WASM allocation.
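Here is a rough sketch of that retry flow. Everything in it is an assumption: `allocWithRetry`, `allocate`, and `sweep` are hypothetical names, `sweep` stands for one pass of a custom collector like the one above, and the allocation failure is assumed to surface as a JavaScript exception (which depends on how the module was built). In Node, `globalThis.gc` only exists when the process is started with `--expose-gc`, and even then the engine decides when WeakRefs are cleared, so this improves the odds rather than guaranteeing success.

```javascript
// Hypothetical: try a WASM allocation; if it fails, give the JS garbage
// collector a chance to run, sweep our own tracker, and try once more.
async function allocWithRetry(allocate, sweep) {
  try {
    return allocate()
  } catch {
    // Only available when Node is started with --expose-gc
    if (typeof globalThis.gc === 'function') globalThis.gc()
    // Yield to the event loop: objects read through a WeakRef during this
    // turn are kept alive until it ends, so sweeping now could miss them.
    await new Promise(res => setTimeout(res, 0))
    sweep()
    return allocate() // a second failure propagates to the caller
  }
}

// Usage with mocks: allocation fails until something has been freed
let freeSlots = 0
const allocate = () => {
  if (freeSlots === 0) throw new Error('out of memory')
  freeSlots--
  return { ok: true }
}
const sweep = () => { freeSlots += 1 }

allocWithRetry(allocate, sweep).then(obj => console.log(obj.ok)) // true
```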

Lines of Code

One of the promises of WASM is that it will let us share code between backend and frontend (assuming your backend is written in a systems language). That sounds great, because it should mean less code on the frontend. So I put it to the test and measured the lines of code in my project. Again, there is a caveat. For memory management I'm copying WASM memory into JavaScript memory, so I have quite the JavaScript/TypeScript wrapper. Another memory management strategy may need fewer lines of code, but it may not be as easy to use.

For my lines of code calculations I used cloc. I also only measured the generator code, since that's the only part of my library that used WASM (the rest of the code was just copy/pasted files, so no savings there). For the C++ column, I'm measuring the size of my bindings.cpp file (the file which defines my Embind bindings to JS). That file is necessary for the WASM compilation, so I'm counting it. Here are the results:

| TypeScript | WASM (Total) | WASM (TS Wrapper) | WASM (C++) |
|---|---|---|---|
| 1,413 | 1,683 | 1,241 | 442 |

The benefits of "code reuse" are a bit muddied now. On the one hand, I'm saving lines of code by simply porting C++ to JavaScript instead of wrapping WASM (1,413 lines versus 1,683, a difference of 270). I'm also simplifying my build pipeline (Emscripten is really nasty, especially when combined with CMake and NPM), and I'm avoiding a lot of the memory headaches discussed above. I also get to take full advantage of JavaScript/TypeScript.

But on the other hand, I'm replicating logic, which means I need to keep my changes "in sync." I'm also having to translate between each language's idea of how code should be written. For instance, C++ has unsigned integers, which makes implementing the TinyMT algorithm easy. In JavaScript I only have doubles, so I had to emulate unsigned arithmetic (see Unsigned Integers in JavaScript). These kinds of gotchas can make porting code difficult, and they can make maintaining changes even harder.
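For a sense of what that emulation involves: JavaScript's bitwise operators work on 32-bit integers, `>>> 0` reinterprets a result as unsigned, and `Math.imul` performs C-style 32-bit multiplication. A minimal sketch of such helpers follows; the names are hypothetical and not taken from my port.

```javascript
// Hypothetical helpers emulating C's uint32_t arithmetic with JavaScript numbers.
// `>>> 0` converts a value to an unsigned 32-bit integer (wrapping mod 2^32).
const u32Add = (a, b) => (a + b) >>> 0
const u32Sub = (a, b) => (a - b) >>> 0
// a * b can exceed 2^53 and lose precision as a double; Math.imul multiplies
// as 32-bit integers instead, matching C's wraparound.
const u32Mul = (a, b) => Math.imul(a, b) >>> 0
const u32Shl = (a, n) => (a << n) >>> 0
// Use >>> (logical shift), not >> (which sign-extends like a signed int)
const u32Shr = (a, n) => a >>> n

console.log(u32Add(0xFFFFFFFF, 1))          // 0
console.log(u32Sub(0, 1))                   // 4294967295
console.log(u32Mul(0xFFFFFFFF, 0xFFFFFFFF)) // 1
console.log(u32Shl(0x80000000, 1))          // 0
console.log(0x80000000 >> 1)                // -1073741824 (sign-extended)
console.log(u32Shr(0x80000000, 1))          // 1073741824
```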

The other issue is that this section is a single anecdotal data point with one memory management solution, so it's extremely hard to extrapolate to other projects.

For the "code reuse" benefit, I'm going to say it's shaky. I wouldn't ever propose WASM purely on the grounds of "code reuse", especially since using the WASM code is pretty hard. But if there's a heavy C++ codebase (e.g. a full video game), I wouldn't count it out either. Yay for another "it depends" argument! But, if I'm honest, this one isn't looking so good for WASM.

Bundle Size

My next test was to take a look at bundle sizes. This is again a single data point, so don't extrapolate from the absolute sizes (i.e. which one is bigger or smaller). I'm looking at how well the WASM code compresses, not at the actual size of the code. It turns out that compression for over-the-network transfer matters a whole lot. Modern JavaScript engines are really good at turning code into execution very quickly, so I'm less worried about parse/startup time and more worried about bandwidth. That said, there is a big caveat to what I'm doing.

I'm using Emscripten to create the WASM output, and Emscripten is known for filling in compatibility gaps (e.g. converting OpenGL to WebGL, providing POSIX polyfills, emulating pthreads, etc.). Emscripten is not known for small bundle sizes (quite the opposite). So keep in mind that another compiler or toolchain (e.g. Cheerp, Zig, WASI SDK, LLVM) could lower the WASM sizes dramatically. The exact numbers only apply to Emscripten, not WASM as a whole. That said, since WASM is a standardized format, the compression ratios should roughly carry over regardless of toolchain. So if you do get a smaller WASM build from something else, the gzip compression ratio should be roughly the same as what I'm getting.

For bundling everything to send to the browser, I used esbuild to create a single JS bundle for each of my implementations (TypeScript and TypeScript + WASM). This gives a more apples-to-apples comparison between the two, even if it's not fully optimized. Here are my sizes (with no minification or compression):

| TypeScript | WASM |
|---|---|
| 886.4kb | 694.7kb |

After minification (still uncompressed):

| TypeScript | WASM |
|---|---|
| 410.kb | 624.3kb |

Now let's look at the gzipped sizes of the minified code:

| TypeScript | WASM |
|---|---|
| 100.7kb | 226.6kb |
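To check compression ratios on your own bundles, Node's built-in `zlib` module is enough. Here's a minimal sketch; `gzipRatio` is a hypothetical helper, `bundle.js` is a placeholder path, and the default gzip level won't necessarily match what a given web server or CDN uses. The usage compares the two extremes: highly repetitive text versus random bytes.

```javascript
const zlib = require('zlib')
const crypto = require('crypto')

// Hypothetical helper: compressed size divided by original size
// (lower means it compresses better).
function gzipRatio(buf) {
  return zlib.gzipSync(buf).length / buf.length
}

// Repetitive, text-like input compresses extremely well...
const text = Buffer.from('function add(a, b) { return a + b }\n'.repeat(2000))
// ...while random bytes barely compress at all.
const noise = crypto.randomBytes(64 * 1024)

console.log(gzipRatio(text).toFixed(3))
console.log(gzipRatio(noise).toFixed(3))

// For a real file: gzipRatio(require('fs').readFileSync('bundle.js'))
```

For the minified bundles above, the same ratio works out to roughly 0.25 for TypeScript (100.7kb out of about 410kb) versus about 0.36 for WASM (226.6kb out of 624.3kb).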

This also highlights an issue that's really bugged me with a lot of WASM frontend code: it's rather difficult to get WASM across the network as efficiently as JavaScript. WASM code is at the mercy of the language's compiler toolchain for how big the final binary is, and it's really hard to get that binary down in size. Not only that, but the binary format just doesn't compress as well with the common web compression schemes we have. Meanwhile, JavaScript is just text that can easily be manipulated after it's written, and it compresses far more readily with standard web compression algorithms. Plus, a lot of effort has gone into tooling to make it smaller and more compressible, with lots of different minifiers and build tools out there. WASM just hasn't had that level of investment.

Ending Thoughts

The main promises of WASM I've heard are better performance and code reuse (especially with servers not written in Node). Those are both great ideas, but I haven't seen them really pan out.

Performance can be better, but it's not a guarantee. Going from WASM land to JavaScript land is slow, and the more often code crosses that boundary, the smaller the performance gain will be. Also, the lack of compressibility could cause loading issues and make initial site renders slower than equivalent JavaScript code.

Reusing code is also a mixed bag. The memory model brings manual memory management to an automatically memory-managed language. The resulting code is overly complex, and it makes JavaScript feel a lot more like a poor man's C than a language for the web. It also requires that your server language can be compiled to WASM (and if you want speed, it had better be a systems language).

I also haven't ever reused server code in the browser - even when using Node. The browser and server just have very different concerns. Plus HTMX makes it so that we don't need to run much (if any) code on the browser. So I really don't see the need to move native code to the browser.

The one place I can see WASM being useful is wrapping native code without having to learn the addon/FFI model for every JavaScript runtime out there (e.g. Node, Deno, Bun, Vercel Edge Runtime, etc.). It's also good for C/C++ devs who just want to use existing code in the browser and don't mind manual memory management. Other than that, I can see why it hasn't taken off and why everything seems to get rewritten in JavaScript, even when there's an open source C library that does the same thing. Outside of those niches, there's just no real benefit to WASM beyond a cool "native game demo in the browser."